It has hardly ever been enough for Google just to be able to censor content on YouTube and apps in its store – “curating” and, critics say, essentially editorializing what users can see when they use Google Search has been high on the list of priorities for a while.

(Article by Didi Rankovic republished from ReclaimTheNet.org)

Coincidentally or not, in the year of US midterm elections, the giant is ramping up this effort to make sure the search engine isn’t simply returning results – like people might still expect it to do – but what Google decides are “trustworthy results” as opposed to “falsehoods and misinformation.”

Google’s self-styled standard of what passes the trustworthiness test is described in the vaguest of terms, ostensibly so that a lot of things can fit that definition: it’s when the behemoth’s systems “don’t have high confidence in the overall quality of the results.”

Considering the power that Google Search has because of its dominant market share in the Western world, any tweaking of algorithms that puts yet more obstacles in the way of the engine returning organic search results is a notable event where access to information and freedom of speech are concerned.

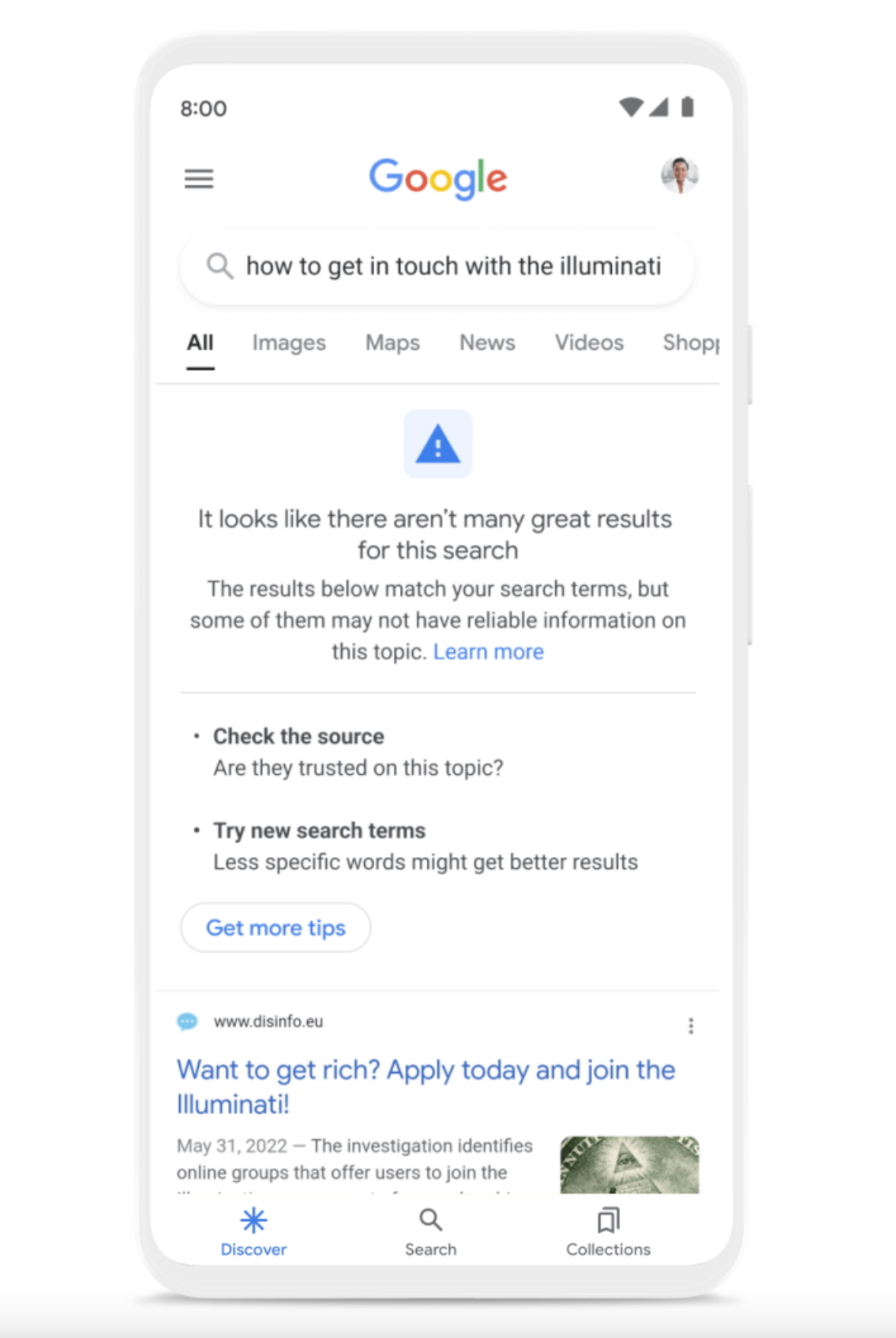

This time, Google is introducing what it calls content advisories. Google says the goal is to help users “understand and evaluate” high-quality information, and also to promote what it calls information literacy. The way this Google-editorialized information is presented to users to “evaluate” is fairly aggressive: on mobile devices, the advisories are full-screen, displayed above the fold where they are most visible.

“It looks like there aren’t many great results for this search,” the advisory will read.

The goal here is to nudge users very strongly to abandon the search that Google effectively labels as untrustworthy. Another “tweak” Google announced affects featured snippets that highlight a particular source; inclusion in these snippets means that a site gets a lot of traffic.

Google is not even pretending to return the results most relevant to a search in an impartial and uninvolved way. Now “AI” will be used to downrank by 40% the “unwanted” information and sites that slip through, and to make sure they get minimal benefit, if any, from snippets.

On its blog, the corporation said that its systems are already trained to identify and prioritize signals which it has decided indicate that content is “authoritative and trustworthy.”

Read more at: ReclaimTheNet.org
